Struggling to get your AI agent production-ready?

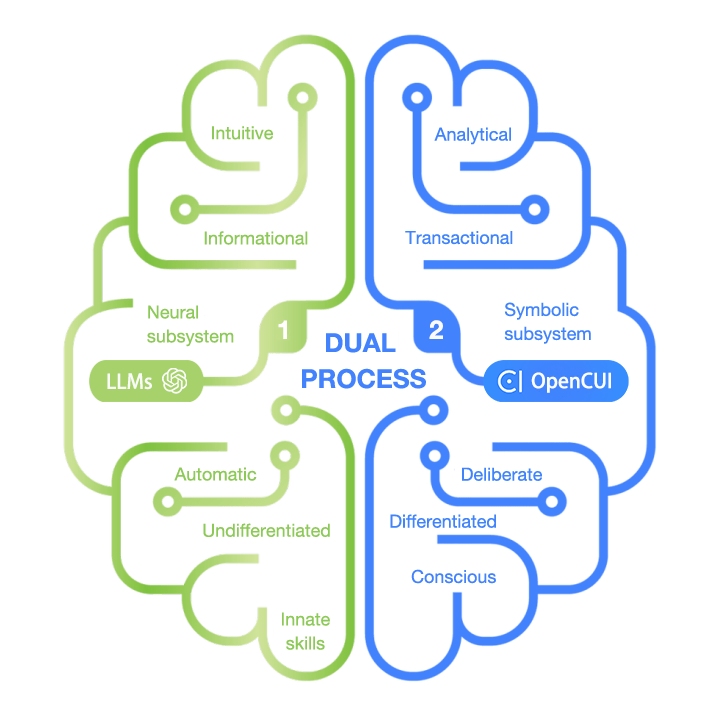

Augment prompting with SOLID software engineering for reliability and real business impact.

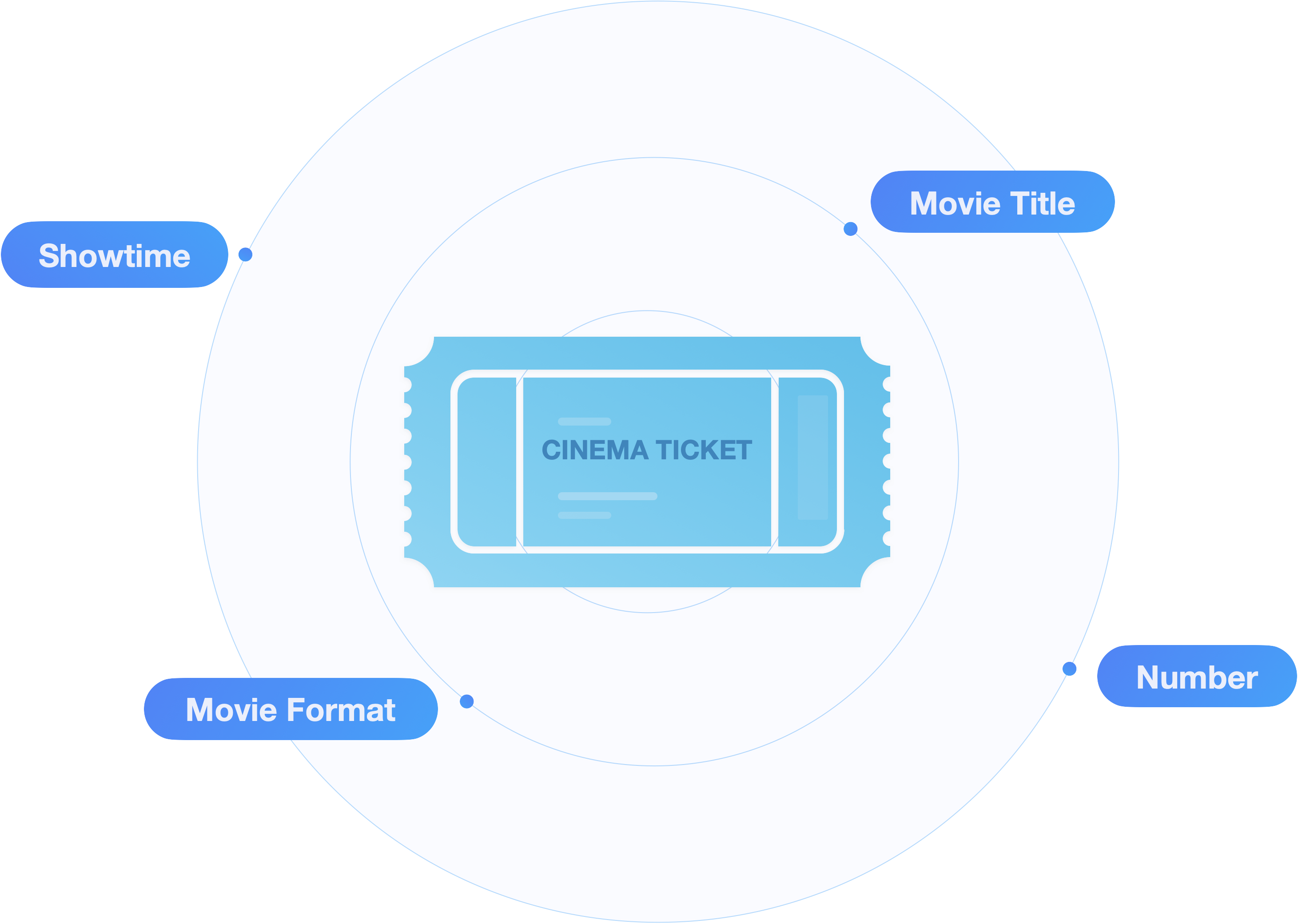

You build agents to serve users, so why spend your time on conversation flows? Just annotate your service schemas with the desired dialog behavior, and we’ll compile them into agents that can negotiate service details with users and deliver the service reliably.
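To make the idea concrete, here is a minimal sketch of a service schema annotated with dialog behavior. It is an illustration only, not the platform's actual schema language: `Slot`, `Service`, `next_prompt`, and the `book_table` service are all hypothetical names chosen for this example.

```python
from dataclasses import dataclass, field
from typing import Dict, List, Optional


@dataclass
class Slot:
    """One service parameter plus its dialog annotation (hypothetical shape)."""
    name: str
    prompt: str           # what the agent asks when this slot is missing
    required: bool = True


@dataclass
class Service:
    """A service signature whose slots carry the desired dialog behavior."""
    name: str
    slots: List[Slot] = field(default_factory=list)

    def next_prompt(self, filled: Dict[str, str]) -> Optional[str]:
        """Return the prompt for the first required slot not yet filled."""
        for slot in self.slots:
            if slot.required and slot.name not in filled:
                return slot.prompt
        return None  # every required slot is filled; the service can be called


# Hypothetical example service: the conversation is derived from the schema,
# not written out as an explicit flow.
book_table = Service(
    name="book_table",
    slots=[
        Slot("party_size", "How many people?"),
        Slot("time", "What time would you like?"),
        Slot("notes", "Any special requests?", required=False),
    ],
)
```

In this sketch the order of questions falls out of the schema itself: the agent keeps asking for the next missing required slot until the service can be invoked.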

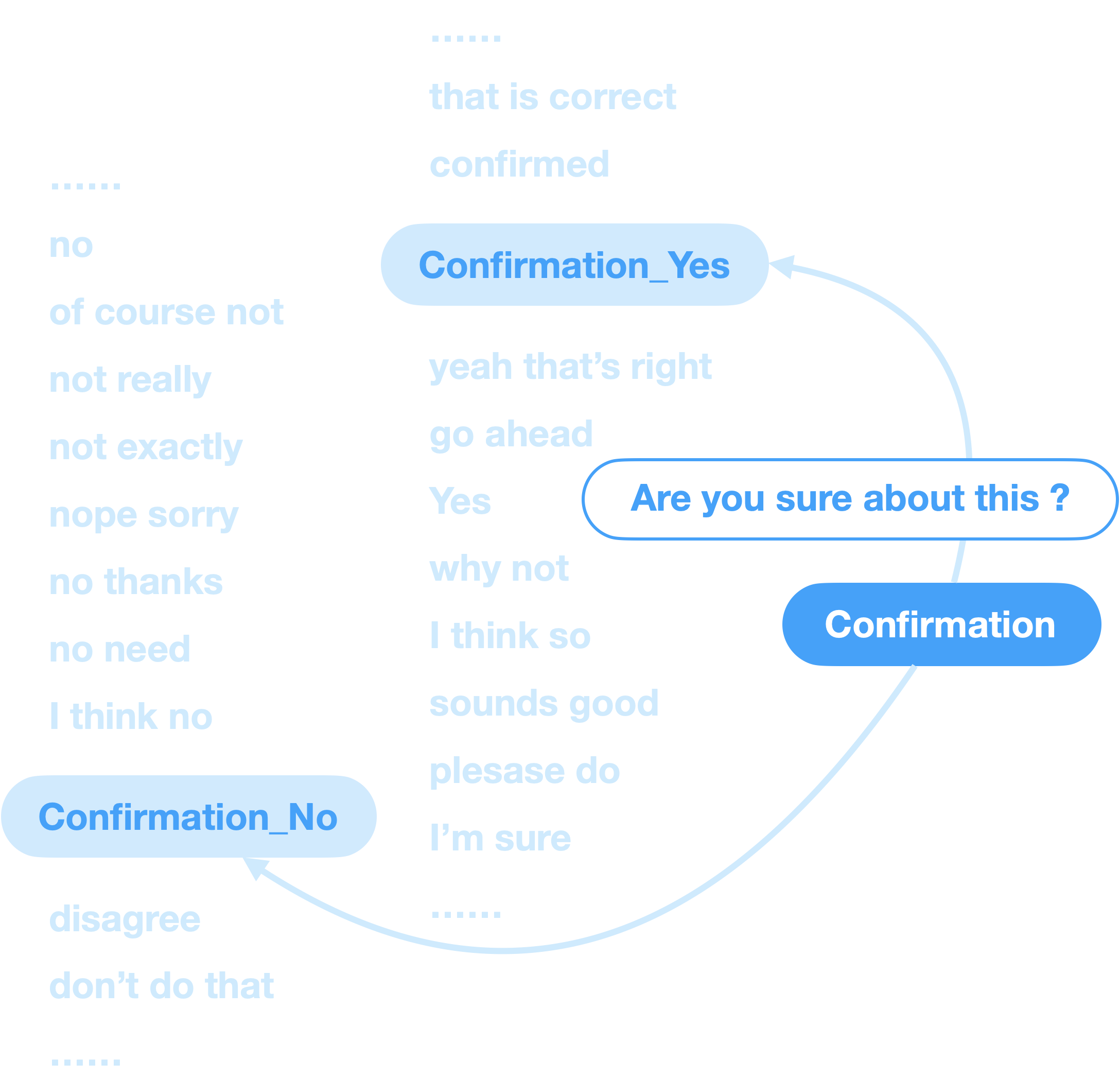

No LLM is perfect, so what happens when it fails? We decompose dialogue understanding into subproblems and solve each one through in-context learning, using examples selected for the current context. That makes issues easier to diagnose and fix, so you can reach production-level accuracy.
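As a rough sketch of what this decomposition could look like, the snippet below builds a separate few-shot prompt for each subproblem, pulling in only the examples tagged for that context. The `Example` type, the `intent` and `slot:party_size` tags, the prompt layout, and `build_prompt` are assumptions made for illustration; the actual LLM call is left out.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class Example:
    context: str    # hypothetical tag naming the subproblem this example serves
    utterance: str
    label: str


# Hypothetical example pool; a wrong answer can be fixed by adding an
# example to the one subproblem that produced it.
EXAMPLES = [
    Example("intent", "I need a table for two", "book_table"),
    Example("intent", "cancel my reservation", "cancel_booking"),
    Example("slot:party_size", "just me and my wife", "2"),
    Example("slot:party_size", "a table for four", "4"),
]


def build_prompt(context: str, utterance: str, examples: List[Example]) -> str:
    """Assemble a few-shot prompt from only the examples matching this subproblem."""
    shots = [e for e in examples if e.context == context]
    lines = [f"Task: {context}"]
    for e in shots:
        lines.append(f"Input: {e.utterance}\nOutput: {e.label}")
    lines.append(f"Input: {utterance}\nOutput:")
    return "\n".join(lines)


# Each subproblem gets its own small, inspectable prompt.
intent_prompt = build_prompt("intent", "three of us for dinner", EXAMPLES)
slot_prompt = build_prompt("slot:party_size", "three of us for dinner", EXAMPLES)
```

Because each prompt covers one narrow question, a failure can be traced to a single subproblem and its examples rather than to one monolithic prompt.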

Our declarative, low-code platform combines the flexibility of prompt engineering with the rigor of software engineering at the level of individual skills, enabling you to craft user-facing agents that are both reliable and cost-effective.

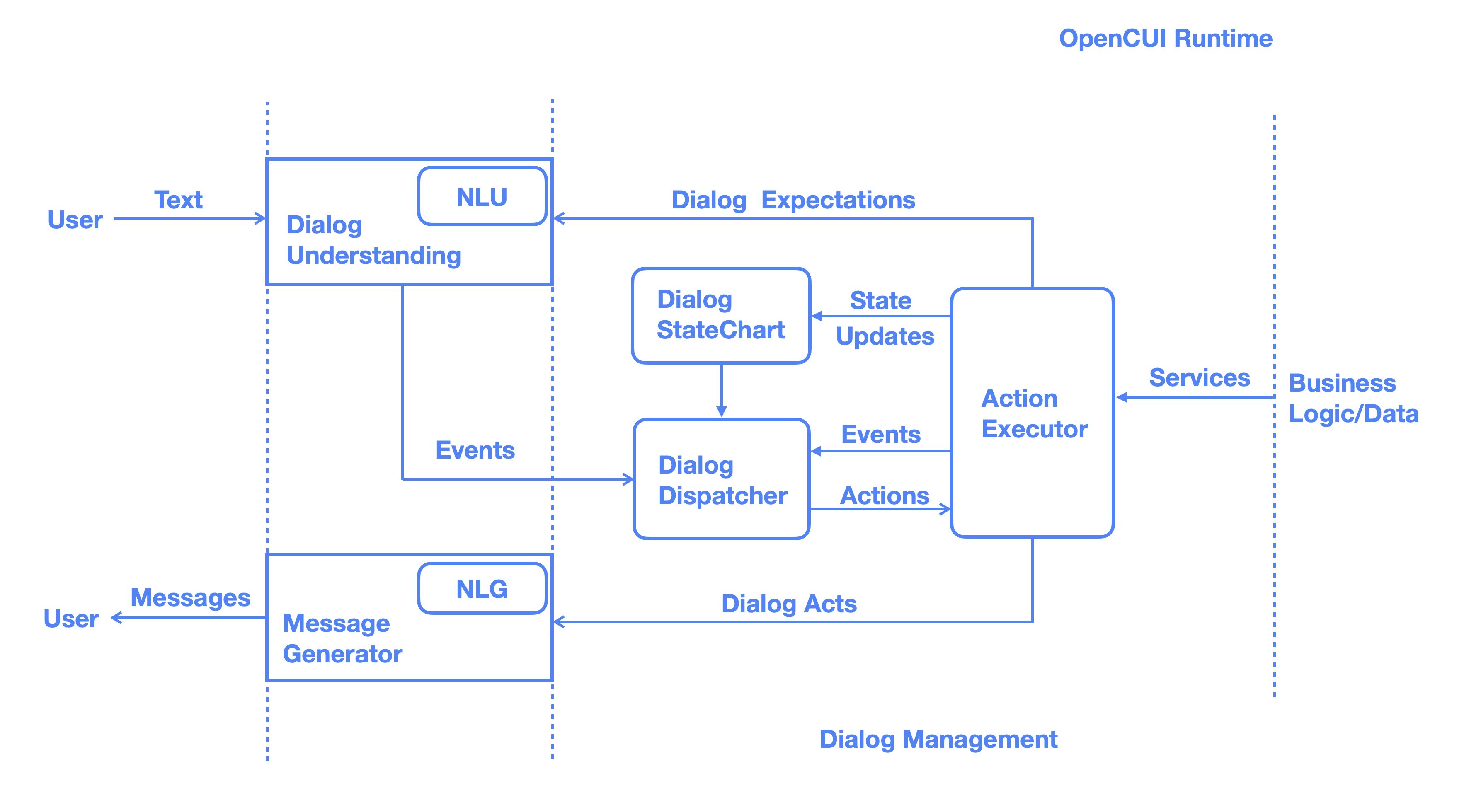

We separate language perception, interaction logic, backend services, and connection concerns—making it easy to support new languages and channels, and seamlessly integrate third-party tools, all within a scalable, component-based architecture.