System1: Leveraging LLMs in Conversational Skills

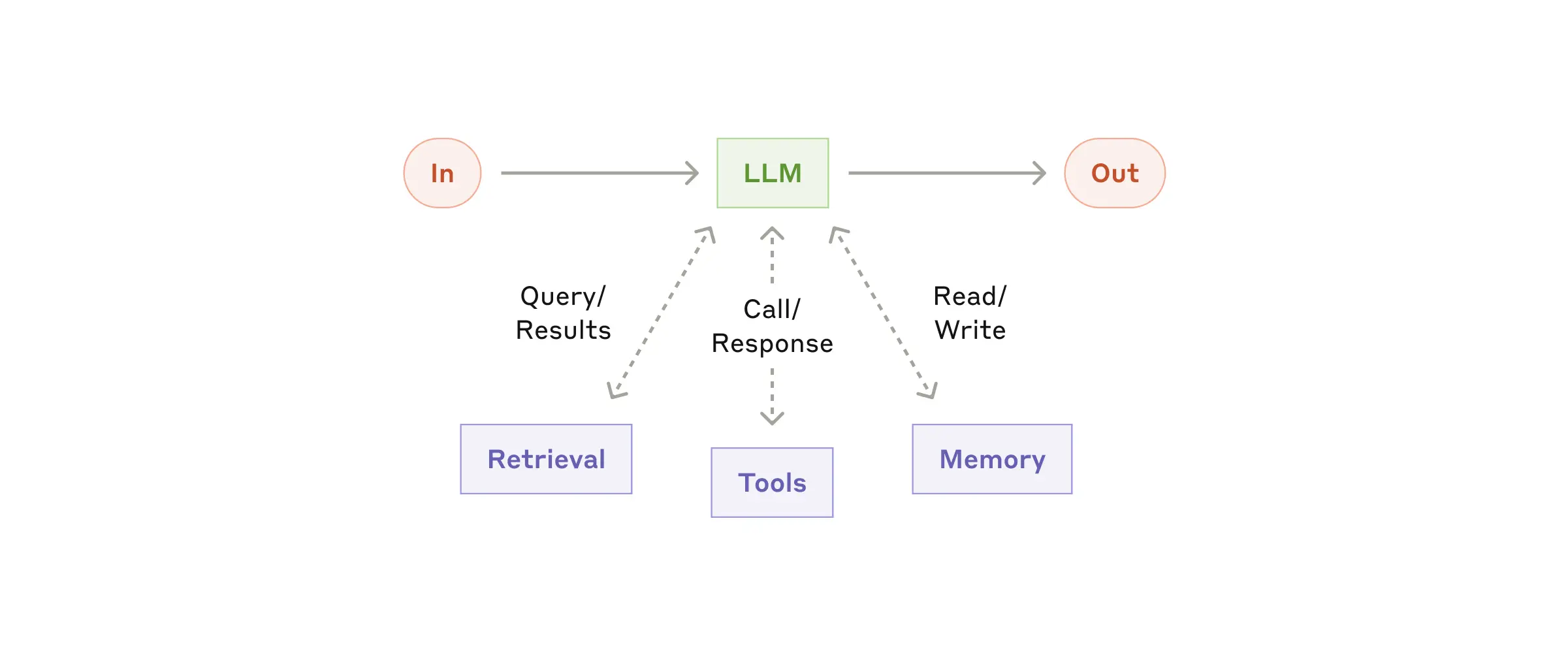

System1 is an approach to building conversational experiences that uses large language models (LLMs) to enhance flexibility, coverage, and naturalness. In its most basic form, System1 is an LLM augmented with knowledge, tools, and memory, with its behavior controlled by a system prompt.
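
To make that composition concrete, here is a minimal Python sketch of how the pieces might be assembled for a single LLM call. The `System1` class, its fields, and `build_messages` are hypothetical names rather than a platform API, and the role-tagged message list assumes a chat-style LLM interface.

```python
from __future__ import annotations

from dataclasses import dataclass, field
from typing import Callable


@dataclass
class System1:
    """Hypothetical container: a system prompt plus knowledge, tools, and memory."""

    system_prompt: str                                             # controls overall behavior
    knowledge: list[str] = field(default_factory=list)             # facts injected into the prompt
    tools: dict[str, Callable[..., str]] = field(default_factory=dict)  # tool name -> callable
    memory: list[dict[str, str]] = field(default_factory=list)     # earlier conversation turns

    def build_messages(self, user_input: str) -> list[dict[str, str]]:
        """Assemble a chat-style message list (assumed format) for one LLM call."""
        system = self.system_prompt
        if self.knowledge:
            system += "\n\nKnowledge:\n" + "\n".join(f"- {fact}" for fact in self.knowledge)
        if self.tools:
            # Simplification: tools are only listed by name here; a real integration
            # would pass structured tool definitions through the LLM API.
            system += "\n\nAvailable tools: " + ", ".join(sorted(self.tools))
        return [
            {"role": "system", "content": system},
            *self.memory,
            {"role": "user", "content": user_input},
        ]


bot = System1(
    system_prompt="You are a friendly travel assistant.",
    knowledge=["Itineraries must include a start date and an end date."],
    tools={"search_hotels": lambda city: f"(hotel results for {city})"},
    memory=[
        {"role": "user", "content": "Hi!"},
        {"role": "assistant", "content": "Hello! Where would you like to go?"},
    ],
)
messages = bot.build_messages("Plan a few days in Kyoto.")
for message in messages:
    print(message["role"])  # prints system, user, assistant, user (one per line)
```

Keeping the prompt, knowledge, tools, and memory as separate fields lets each be swapped independently while the system prompt remains the single place that steers behavior.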

On this platform, a prompt-engineered System1 can be integrated in three distinct ways, each serving a different purpose:

1. LLM-Based Function Implementation

- What it is: Use the LLM to perform internal logic or compute outputs that are returned as structured data.

- When it's used: Deterministically, as part of a skill's execution path.

- Why it's useful: Allows for implementing flexible logic or interpreting user input without hard-coding rules.

- Example: Mapping a user’s vague description of a travel plan into a structured itinerary object.

```json
{
  "destination": "Kyoto",
  "start_date": "2025-04-30",
  "end_date": "2025-05-04",
  "interests": ["temples", "hot springs"]
}
```

2. LLM-Based Action

- What it is: The LLM generates a natural language message to be shown to the user.

- When it's used: Deterministically invoked by the system when certain conditions are met.

- Why it's useful: Makes responses feel fluid and personalized without hand-authoring every message.

- Example: “Your table is reserved for 7 PM at Sushi Den. Enjoy your evening!”
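
A minimal Python sketch of an LLM-based action, assuming the skill can call some prompt-to-text function once its own logic has decided a message is due. `LLM`, `reservation_confirmed_action`, and `stub_llm` are hypothetical names. The skill controls when the message is sent; the LLM only controls how it is worded.

```python
from __future__ import annotations

from typing import Callable

LLM = Callable[[str], str]  # hypothetical: any function that maps a prompt to generated text


def reservation_confirmed_action(llm: LLM, restaurant: str, time: str) -> str:
    """Run deterministically once a booking succeeds; the LLM only phrases the message."""
    prompt = (
        "Write one short, friendly sentence confirming a restaurant reservation.\n"
        f"Restaurant: {restaurant}\n"
        f"Time: {time}\n"
        "Do not mention any details that are not listed above."
    )
    message = llm(prompt).strip()
    # Guard (one possible design): if the generated text drops a key fact,
    # fall back to a hand-written template instead of showing it to the user.
    if restaurant not in message or time not in message:
        message = f"Your table is reserved for {time} at {restaurant}."
    return message


def stub_llm(prompt: str) -> str:
    """Stand-in for a real model call so the sketch runs offline."""
    return "Your table is reserved for 7 PM at Sushi Den. Enjoy your evening!"


print(reservation_confirmed_action(stub_llm, "Sushi Den", "7 PM"))
# prints: Your table is reserved for 7 PM at Sushi Den. Enjoy your evening!
```

The template guard is a design choice, not something the platform is known to require: it keeps a deterministically triggered message from ever omitting the booking details.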

3. LLM-Based Fallback

- What it is: A last-resort natural-language response generated by the LLM. It can be accessed in Frame's interaction tab.

- When it's used: Non-deterministically, only when the system cannot match the user input to any known intent, skill, or handler.

- Why it's useful:
  - Catches unexpected inputs.
  - Prevents user frustration with dead ends.
  - Maintains a sense of flow and engagement.

- Example: “Hmm, I didn’t catch that. Can you rephrase or tell me more about what you’re trying to do?”
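
The fallback's trigger condition can be sketched as a router that tries every deterministic handler first and reaches the LLM only when nothing matches. This is a minimal Python sketch under stated assumptions: regular expressions stand in for the platform's real intent matching, and `INTENTS`, `route`, and `stub_fallback` are hypothetical names.

```python
from __future__ import annotations

import re
from typing import Callable

Handler = Callable[[str], str]

# Hypothetical intent table: regexes stand in for the platform's real intent matching.
INTENTS: list[tuple[re.Pattern[str], Handler]] = [
    (re.compile(r"\b(book|reserve)\b.*\btable\b", re.IGNORECASE),
     lambda text: "Sure! For what time and how many people?"),
    (re.compile(r"\bcancel\b", re.IGNORECASE),
     lambda text: "Okay, which reservation would you like to cancel?"),
]


def route(user_input: str, intents: list[tuple[re.Pattern[str], Handler]],
          llm_fallback: Handler) -> str:
    """Try each deterministic handler in order; call the LLM only when none matches."""
    for pattern, handler in intents:
        if pattern.search(user_input):
            return handler(user_input)
    return llm_fallback(user_input)


def stub_fallback(text: str) -> str:
    """Stand-in for an LLM call that would receive the unmatched input as context."""
    return ("Hmm, I didn't catch that. Can you rephrase or tell me more "
            "about what you're trying to do?")


print(route("Can I book a table tonight?", INTENTS, stub_fallback))
# prints: Sure! For what time and how many people?
print(route("what's the vibe like on weekends", INTENTS, stub_fallback))
# prints the fallback message from stub_fallback
```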

Key Benefits of System1

- Graceful degradation: The user still gets a relevant or helpful response even when the system doesn't "understand" in a deterministic sense.

- Increased coverage: Handles edge cases, slang, typos, or user deviations from expected patterns.

- Iterative learning: Insights from fallback usage can guide future training or refinement of structured skills.

- Scalability: Reduces the need for exhaustive scripting and rules, letting you focus manual effort on high-value or high-frequency paths.
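
The iterative-learning loop can start as simply as counting which inputs end up in the fallback. A minimal Python sketch, assuming fallback inputs are logged as plain strings; `top_unhandled` is a hypothetical helper, not a platform feature.

```python
from __future__ import annotations

from collections import Counter


def top_unhandled(fallback_log: list[str], n: int = 3) -> list[tuple[str, int]]:
    """Rank inputs that reached the LLM fallback, as candidates for new structured skills."""
    # Naive normalization: lowercase and collapse whitespace. A production pipeline
    # would more likely cluster semantically similar utterances.
    normalized = (" ".join(text.lower().split()) for text in fallback_log)
    return Counter(normalized).most_common(n)


log = [
    "Do you have parking?",
    "do you have   parking?",
    "Is there a dress code?",
    "DO YOU HAVE PARKING?",
]
print(top_unhandled(log))
# prints: [('do you have parking?', 3), ('is there a dress code?', 1)]
```

Frequent entries at the top of this list are natural candidates for a dedicated deterministic skill, which moves them off the fallback path.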

Summary

System1 unifies three LLM usage patterns to create expressive and resilient conversational experiences:

| Method | Trigger Type | Output Type | Use Case |
|---|---|---|---|
| Function Implementation | Deterministic | Structured Data | Logic, interpretation, transformations |
| Action | Deterministic | Natural Language | Fluent and adaptive user messaging |
| Fallback | Non-deterministic | Natural Language | Handling errors, edge cases, or unknown input |

By blending these approaches, a prompt-engineered System1 can complement a software-engineered System2, achieving both precision and creativity in conversation design.
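
The handoff between the two systems is easiest to see in the Function Implementation pattern: System2 code needs structured data it can trust, so LLM output is typically validated before use. A minimal Python sketch, assuming the LLM has been prompted to return exactly the itinerary JSON from the travel example; `Itinerary` and `parse_itinerary` are hypothetical names, and what to do on a `ValueError` (re-prompt, ask the user, or take another path) is left to the skill.

```python
from __future__ import annotations

import json
from dataclasses import dataclass
from datetime import date


@dataclass(frozen=True)
class Itinerary:
    """Hypothetical typed shape that deterministic (System2) code can rely on."""

    destination: str
    start_date: date
    end_date: date
    interests: tuple[str, ...]


def parse_itinerary(llm_output: str) -> Itinerary:
    """Validate LLM-produced JSON; raise ValueError for malformed or inconsistent output."""
    data = json.loads(llm_output)  # json.JSONDecodeError is a subclass of ValueError
    if not isinstance(data, dict):
        raise ValueError("expected a JSON object")
    if not all(isinstance(data.get(key), str) for key in ("destination", "start_date", "end_date")):
        raise ValueError("destination, start_date, and end_date must be strings")
    interests = data.get("interests", [])
    if not isinstance(interests, list) or not all(isinstance(i, str) for i in interests):
        raise ValueError("interests must be a list of strings")
    start = date.fromisoformat(data["start_date"])  # raises ValueError unless a valid ISO date
    end = date.fromisoformat(data["end_date"])
    if end < start:
        raise ValueError("end_date is before start_date")
    return Itinerary(data["destination"], start, end, tuple(interests))


raw = ('{"destination": "Kyoto", "start_date": "2025-04-30", '
       '"end_date": "2025-05-04", "interests": ["temples", "hot springs"]}')
trip = parse_itinerary(raw)
print(trip.destination, (trip.end_date - trip.start_date).days)  # prints: Kyoto 4
```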